Evolving the AI Agent Spectrum: From Software to Embodied AI

In my piece “Agents in the AI-First Company,” I introduced a five-level spectrum for understanding software agents. It generated a strong response and many valuable comments, for which I’m incredibly grateful.

Some readers questioned the original progression of the spectrum. They noted that developing agents that can collaborate based on pre-programmed rules is a distinct, and often simpler, challenge compared to creating a single agent that can truly learn and evolve on its own. Their argument was persuasive: we will likely develop and deploy systems of self-coordinating agents before mastering truly autonomous learning agents.

This insight doesn’t just swap two levels; it highlights the need for a more nuanced framework. Based on these discussions, I’ve added a level to the original spectrum so that it better reflects how AI capabilities are actually developing.

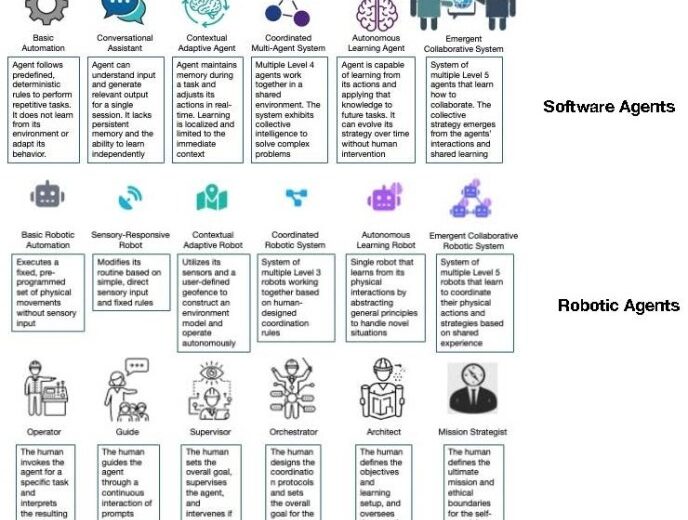

An Updated 6-Level Agent Spectrum

The refined spectrum now distinguishes between systems of coordinating agents (Level 4) and single autonomous learning agents (Level 5), and assigns collaborating learning agents to a new Level 6, thereby creating a more logical and robust progression.

- Level 1: Basic Automation. The agent follows predefined, deterministic rules to perform repetitive tasks. It does not learn from its environment or adapt its behavior.

- Example: A UiPath Robot executing a pre-defined workflow to process invoices.

- Level 2: Interactive Agent. The agent can understand input and generate relevant output for a single session. It lacks persistent memory and the ability to learn independently.

- Example: A basic FAQ chatbot on a website that responds to specific keywords without remembering the conversation.

- Level 3: Contextual Adaptive Agent. The agent maintains memory during a task and adjusts its actions in real-time. Its adaptation is situational and does not create general knowledge.

- Example: A chatbot like Gemini or ChatGPT that uses the history of the current conversation to provide a coherent, multi-turn dialogue.

- Level 4: Coordinated Multi-Agent System. A system of multiple Level 3 agents that work together based on pre-programmed, human-designed rules and communication protocols.

- Example: A Zapier- or MCP-based workflow where a new email in Gmail triggers agents to save an attachment to Dropbox and send a notification to Slack.

- Level 5: Autonomous Learning Agent. The agent learns from its actions by abstracting general principles from its experiences, allowing it to act effectively in novel situations and evolve its strategy over time.

- Example: DeepMind’s AlphaGo, which learned to play Go at a superhuman level by developing novel strategies through self-play.

- Level 6: Emergent Collaborative System. A system of multiple Level 5 agents that learn how to collaborate. The system’s collective strategy emerges from the agents’ interactions and shared learning.

- Example: Google DeepMind’s Composable Reasoning research, where multiple LLM agents learn to decompose complex problems and form dynamic teams to solve them.
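
The level definitions above can be read as a small decision procedure over a few yes/no traits. The sketch below encodes that reading; the trait names (`interactive`, `task_memory`, and so on) are my own hypothetical labels rather than part of any library, and real systems rarely fall this cleanly into a single box.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentTraits:
    """Hypothetical yes/no traits used to place an agent on the spectrum."""
    interactive: bool            # interprets input and generates a relevant response
    task_memory: bool            # keeps context for the duration of a task
    learns_abstractions: bool    # generalizes principles from past experience
    multi_agent: bool            # operates as part of a system of agents
    learned_coordination: bool = False  # coordination is learned, not hand-designed


def spectrum_level(t: AgentTraits) -> int:
    """Return the spectrum level (1-6) implied by the traits."""
    if t.learns_abstractions:
        # Level 6 needs learning agents that also learn how to collaborate.
        # Learning agents wired together by hand-written rules are not a
        # separate level in the spectrum, so this sketch reports them as 5.
        return 6 if (t.multi_agent and t.learned_coordination) else 5
    if t.multi_agent:
        return 4  # Level 3 agents coordinated by human-designed rules
    if t.task_memory:
        return 3  # situational adaptation within a task
    if t.interactive:
        return 2  # single-session input/output, no memory
    return 1      # deterministic, rule-following automation
```

For example, a chatbot that keeps the current conversation’s history but learns nothing across sessions, `AgentTraits(interactive=True, task_memory=True, learns_abstractions=False, multi_agent=False)`, maps to Level 3.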

From Software to the Physical World: The Embodied AI Spectrum

Embodied AI refers to intelligent agents that possess a physical body. This allows them to perceive, reason about, and interact directly with the physical world. Unlike purely software-based AI agents, the intelligence of these agents is shaped by their physical experiences and sensory feedback. The agent spectrum extends naturally beyond software to the world of robotics.

- Level 1: Basic Robotic Automation. Executes a fixed, pre-programmed set of physical movements without adapting to its surroundings.

- Example: A CNC machine cutting a piece of metal.

- Level 2: Sensory-Responsive Robot. Modifies its routine based on simple, direct sensory input according to fixed rules.

- Example: An intelligent traffic light that adjusts its timing based on real-time data from sensors placed at an intersection.

- Level 3: Contextual Adaptive Robot. Utilizes its sensors and a user-defined geofence to construct a model of its environment, enabling it to operate autonomously and complete a specific task.

- Example: A robotic vacuum cleaner operating in a house, or a hospital robot delivering medicine to different departments.

- Level 4: Coordinated Robotic System. A system of multiple Level 3 robots working together based on human-designed coordination rules.

- Example: A warehouse robot fleet following centrally managed traffic rules.

- Level 5: Autonomous Learning Robot. A single robot that learns from its physical interactions by abstracting general principles (e.g., about physics, navigation) to handle novel situations.

- Example: A Waymo autonomous vehicle, whose driving model is continuously improved based on the collective experience of the entire fleet.

- Level 6: Emergent Collaborative Robotic System. A system of multiple Level 5 learning robots that learn to coordinate their physical actions and strategies collectively as a result of their shared experience.

- Example: A search-and-rescue drone swarm that learns, as a group, the most effective search patterns for covering an area and locating survivors.
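
To make the gap between Level 2 and the higher levels concrete, here is a minimal sketch of the fixed-rule logic behind a sensor-driven traffic light. The thresholds and durations are hypothetical placeholders, not values from any real controller. The key property is that the mapping from sensor reading to action never changes: the controller reacts, but it does not learn. A Level 1 controller, by contrast, would ignore the reading entirely and always use the same phase length.

```python
def green_seconds(queued_vehicles: int) -> int:
    """Level 2 sketch: fixed rules map a sensor reading to a green-phase length.

    The thresholds (0 and 10 vehicles) and durations are hypothetical.
    """
    if queued_vehicles < 0:
        raise ValueError("vehicle count cannot be negative")
    if queued_vehicles == 0:
        return 10  # minimum green phase when the sensor sees no queue
    if queued_vehicles <= 10:
        return 30  # normal green phase
    return 60      # extended green phase for heavy traffic
```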

The updated agent spectrum is shown below.

The Crucial Leap: From Copying Context to True Learning

A critical distinction defines the jump from the lower levels to the higher ones. Agents at Level 3 are masters of situational adaptation. They can “copy” the context of a specific task (e.g., a robotic vacuum cleaner mapping a room), but they do not learn anything general from that experience. If a future context is not a near-exact match, the stored experience is of little use.

The revolutionary leap at Level 5 is the ability to abstract over context. While the ultimate goal is for agents to learn and generalize underlying principles through methods like deep reinforcement learning, the path to this level of autonomy is not all or nothing. We are likely to see significant near-term progress from agents that become exceptionally skilled at drawing from vast contextual histories to handle new situations, especially rare edge cases. This sophisticated form of pattern matching is a critical stepping stone, but the true paradigm shift remains the move from finding near-matches to developing genuine, abstract understanding.
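
The difference between copying context, pattern matching, and abstraction can be illustrated with a toy cleaning-time estimator. The room sizes and timings below are invented for illustration, and the “learned” principle is a simple minutes-per-square-meter rate rather than the deep reinforcement learning mentioned above; the point is only to show how each approach behaves when it meets a room it has never seen.

```python
import math

# Hypothetical past experiences: (room width m, room length m) -> minutes to clean.
EXPERIENCES = {(4.0, 5.0): 20.0, (3.0, 3.0): 9.0, (6.0, 5.0): 30.0}


def level3_recall(context):
    """Copying context: only an exact match of a past situation is useful."""
    return EXPERIENCES.get(context)  # None for any room not seen before


def nearest_match(context):
    """Stepping stone: reuse the outcome of the most similar past situation."""
    closest = min(EXPERIENCES, key=lambda past: math.dist(past, context))
    return EXPERIENCES[closest]


def learned_rate():
    """Abstraction: infer a general principle (minutes per square meter)."""
    total_area = sum(width * length for width, length in EXPERIENCES)
    total_minutes = sum(EXPERIENCES.values())
    return total_minutes / total_area


def level5_estimate(context):
    """Apply the abstracted principle to any room, seen or unseen."""
    width, length = context
    return learned_rate() * width * length
```

With these numbers, exact recall returns nothing for an unseen 10 m × 8 m room, nearest-match reuse returns 30 minutes (the time for the most similar but much smaller room), and the abstracted rate of one minute per square meter predicts 80 minutes.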

A Note on Learning Architectures: Homogeneous vs. Personalized Models

The discussion of learning agents (Levels 5 and 6) brings up a critical design choice: how is the learning managed across many users or units? The answer depends entirely on the use case.

- Homogeneous Fleets: For systems like Waymo’s autonomous vehicles or a factory’s fleet of autonomous robots, consistency and predictability are paramount. Here, every agent runs the same, centrally updated model. The agent’s capability is defined by the entire system: the vehicles that collect experiences and the data center that processes those experiences to improve the model used by the fleet. The goal is to create a single, highly optimized “brain” that can be deployed in increasingly complex environments, ensuring that every unit behaves identically and benefits from the collective learning of the entire fleet.

- Personalized Ecosystems: In other scenarios, such as a personal software assistant, uniformity is undesirable. Each user has a unique context, private data, and specific needs, so each agent must be trained or fine-tuned differently. This introduces privacy and data-isolation challenges, which advanced methods such as Federated Learning are helping to address. In Federated Learning, each device trains on its own data and shares only model updates, so a global model can learn from the collective experience of all users without their raw data ever leaving their devices. This enables both personalization and shared intelligence.
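
Federated Learning, mentioned in the Personalized Ecosystems bullet, is easiest to see in a stripped-down form. The sketch below follows the FedAvg idea: each client runs a few gradient steps on its private data, and the server averages only the resulting parameters, weighted by how many samples each client holds. The one-parameter model, learning rate, step counts, and data are hypothetical simplifications; production systems add secure aggregation, client sampling, and far larger models.

```python
from typing import List, Tuple

Dataset = List[Tuple[float, float]]  # private (x, y) pairs that never leave a client


def local_update(w: float, data: Dataset, lr: float = 0.01, steps: int = 50) -> float:
    """Client side: gradient descent on mean squared error for the model y = w * x."""
    for _ in range(steps):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w  # only the updated parameter is shared, never the data


def federated_round(w_global: float, clients: List[Dataset]) -> float:
    """Server side (FedAvg-style): average client parameters, weighted by sample count."""
    updates = [(local_update(w_global, data), len(data)) for data in clients]
    total = sum(n for _, n in updates)
    return sum(w * n for w, n in updates) / total


def personalize(w_global: float, data: Dataset) -> float:
    """Optional on-device fine-tuning: adapts the shared model to one user's data."""
    return local_update(w_global, data, steps=20)
```

In a homogeneous fleet, the averaged parameter would simply be pushed to every unit. In a personalized ecosystem, each user additionally runs `personalize` on-device, so their copy drifts toward their own data while the shared model still benefits from everyone. For example, ten rounds of `federated_round` on two clients whose data follow y = 2x bring the global weight very close to 2.0.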

This distinction between uniform and personalized learning architectures is a crucial factor in the real-world deployment of advanced agents.

The Next Dimension: Human-Agent Teaming

So far, our framework has focused on the capabilities of agents and their relationship with a single human user. However, the future of work involves increasing collaboration between humans and AI agents. This prospect raises a crucial question: What happens when teams of humans collaborate with agents to achieve a shared goal? This introduces a new dimension of complexity.

- Shared Agent (One-to-Many): In this model, a single agent instance serves an entire team. The agent must understand not only the task but also the team’s social dynamics; it must also manage shared context and potentially mediate conflicting human inputs. This requires a high degree of contextual awareness, likely demanding Level 5 capabilities.

- Individual Agents (Many-to-Many): Each team member has their own agent. This leads to two distinct and powerful sub-cases:

- Homogeneous Teaming: Every team member uses the same type of agent (e.g., everyone has a “writing agent”). This creates an immediate need for the agents to coordinate, driving the development of Level 4 (programmed) and Level 6 (learned) collaboration.

- Heterogeneous Teaming: Team members use different, specialized agents (e.g., a writer with a “writing agent,” an editor with an “editing agent,” and a researcher with a “fact-checking agent”). This represents a true division of labor among the agents themselves, requiring them to be aware of each other’s roles and seamlessly hand off tasks. This is the ultimate vision for a Level 6 emergent collaborative system, creating a dynamic, self-optimizing digital assembly line.
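
The heterogeneous case hinges on agents knowing each other’s roles and handing off work. Below is a minimal sketch of that handoff pattern with three hypothetical role agents that only append a marker to the task; in practice, each would call a model or tool. Note that the handoff order here is hard-coded by each agent, which is Level 4 behavior; in the Level 6 vision described above, the routing itself would be learned.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class Task:
    content: str
    history: List[str] = field(default_factory=list)  # roles that handled the task


Handler = Callable[[Task], Tuple[Task, Optional[str]]]


def writing_agent(task: Task) -> Tuple[Task, Optional[str]]:
    task.content += " | drafted"
    return task, "editing"        # hand off to the editor's agent


def editing_agent(task: Task) -> Tuple[Task, Optional[str]]:
    task.content += " | edited"
    return task, "fact_checking"  # hand off to the researcher's agent


def fact_checking_agent(task: Task) -> Tuple[Task, Optional[str]]:
    task.content += " | fact-checked"
    return task, None             # no further handoff: the task is complete


# Shared registry: how each agent "knows" which role to hand work to.
ROLES: Dict[str, Handler] = {
    "writing": writing_agent,
    "editing": editing_agent,
    "fact_checking": fact_checking_agent,
}


def run_team(task: Task, start_role: str, max_hops: int = 10) -> Task:
    """Pass a task between role-specialized agents until one ends the chain."""
    role: Optional[str] = start_role
    hops = 0
    while role is not None:
        if hops >= max_hops:
            raise RuntimeError("handoff chain did not terminate")
        task.history.append(role)
        task, role = ROLES[role](task)
        hops += 1
    return task
```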

Understanding these teaming architectures is critical. The future of productivity will be defined not just by the power of individual agents, but by how they are woven into the fabric of human collaboration. The development of standardized communication protocols, such as Google’s Agent-to-Agent (A2A) protocol or Anthropic’s Model Context Protocol (MCP), will be a critical accelerator for creating these robust, heterogeneous agent teams at scale.
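
Part of what a standardized protocol provides is a common message shape that any agent can produce and any tool server can understand. MCP, for example, is built on JSON-RPC 2.0, and a client asks a server to run a tool with a `tools/call` request. The sketch below only builds such a request; the tool name `save_attachment` and its arguments are hypothetical, and a real client would first complete MCP’s initialization handshake and send the message over a transport such as stdio or HTTP.

```python
import json
from itertools import count

_request_ids = count(1)  # JSON-RPC requests carry an id so responses can be matched


def mcp_tool_call(tool_name: str, arguments: dict) -> str:
    """Build a JSON-RPC 2.0 request in the shape MCP uses for tools/call."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)
```

Because the envelope is the same for every tool, a writing agent, an editing agent, and a fact-checking agent can all reach the same tool servers without bespoke integration code for each pairing.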

A New Path to Advanced Embodied AI: The Rise of Foundation Models

Recent breakthroughs from Google, the Toyota Research Institute, and others demonstrate a revolutionary new method for creating advanced physical agents. This approach uses foundation models as the “brain” for a robot.

This development does not change the agent spectrum. Instead, it provides a powerful new pathway to achieving Level 5 capabilities. By pre-loading a robot with a foundation model, we give it a “common sense” understanding of the world. It doesn’t need to learn what an “apple” is from scratch; it inherits that abstract knowledge. As a result, the robot’s training can focus on connecting this vast knowledge to physical actions. This method is a massive accelerator for creating Level 5 agents that can generalize and act effectively in novel situations, perfectly aligning with the core definition of that level.
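
A deliberately tiny analogy for this approach: keep a pretrained representation frozen and train only a small head that maps it to an action. Here the “foundation model” is a hypothetical lookup table of object features, and the action is a grip force between 0 and 1; real systems use large vision-language models and far richer action spaces, and often fine-tune the model itself. The robot is never shown an egg, yet because the frozen features already mark an egg as graspable and fragile, the trained head assigns it the same gentle grip it learned for a tomato.

```python
from typing import Dict, List, Tuple

# Hypothetical stand-in for a pretrained model: features it already "knows".
# Feature order: [graspable, fragile]. These values are never updated.
FROZEN_FEATURES: Dict[str, List[float]] = {
    "apple": [1.0, 0.0],
    "tomato": [1.0, 1.0],
    "table": [0.0, 0.0],
    "egg": [1.0, 1.0],  # never appears in the demonstrations below
}


def grip_force(obj: str, w: List[float], b: float) -> float:
    """Trainable head: a linear map from frozen features to grip force."""
    x = FROZEN_FEATURES[obj]
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def train_head(demos: List[Tuple[str, float]], lr: float = 0.5,
               epochs: int = 300) -> Tuple[List[float], float]:
    """Fit only the head's weights with SGD on squared error; features stay frozen."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for obj, target in demos:
            err = grip_force(obj, w, b) - target
            x = FROZEN_FEATURES[obj]
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b


# Hypothetical physical demonstrations: object -> appropriate grip force.
DEMOS = [("apple", 0.8), ("tomato", 0.3), ("table", 0.0)]
```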
